
CI/CD Pipeline for Front-End with CodeBuild and Serverless Framework

Part 4 of "Deploy Static Website To AWS"

Table of Contents

- Overview

- The Problem

- The Goal

- Pre-Requisites

- Resources

- Creating the Front-End CodeBuild Project

- Pipeline Settings Chosen in this Example

- Steps

- 0. Cleanup serverless.yml

- 1. Create and Format the Buildspec File

- 2. Add the targetBranch variables to the .config/env.json file

- 3. Configure the CodeBuild Project in the serverless.yml

- 4. Configure the Project's Service IAM Role and Policies in the serverless.yml

- 5. Update the Bucket Policy to Accept CodeBuild Projects

- 6. Deploy the code to create the pipeline

- 7. Test

- 8. Restrict GitHub permissions

- What's Next

Overview

The Problem

The front-end static application was created and deployed in the first part of this blog series. Although there have been quite a few improvements to the content delivery and AWS infrastructure deployment, nothing has changed in how the static files reach the S3 bucket. Currently, the process is to make changes to the Next.js code or markdown blog posts, build the code into static files, and finally copy those static files to the S3 bucket.

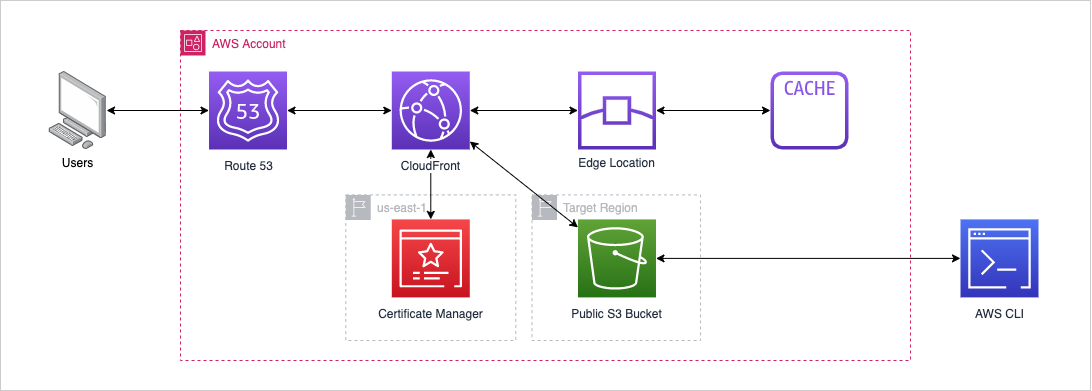

A reminder of the current architecture:

Although we have managed to reduce the amount of work required to set up and manage individual resources, the developer still needs to maintain and manually deploy the infrastructure stack when changes occur, build and copy the appropriate static files to the destination bucket, and manage the different versions, code branches, and changes across multiple environments. This approach means that developers require constant access to the develop and prod AWS accounts via the CLI or Management Console just to update a minor feature.

The Goal

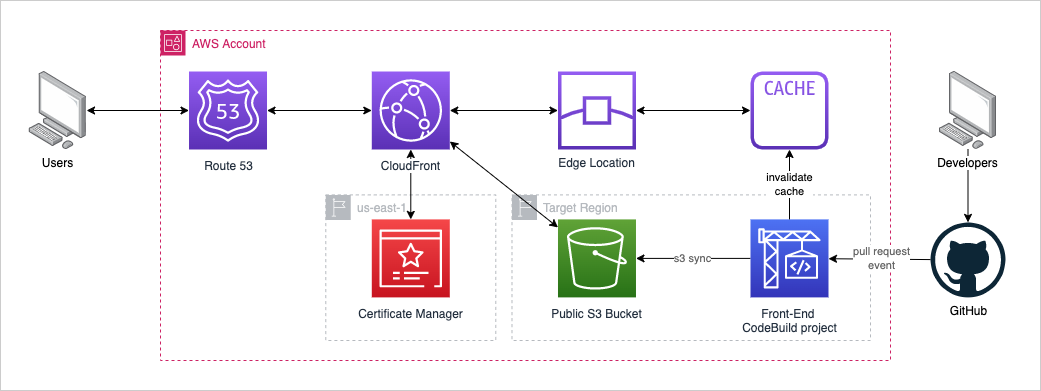

This addition to the series aims to remove any need to directly access the AWS account via the CLI or Management Console when updating the front-end. This can be achieved by listening for merged pull requests in specific branches of the GitHub repo and triggering the build process when a matching event is received.

It may not look like much of a change when comparing the diagrams. However, the update allows the developer to manage only the code base in GitHub, which triggers builds based on the configured events. This is the first step towards removing all direct interaction with the front-end resources or AWS account when updating the front-end. In addition, the GitHub account can be used to restrict the actions that can be taken on the repository:

- Set a minimum number of approvals required before pull requests can be merged

- Limit direct commits to specific branches (i.e. release branches that can only be updated via pull requests)

- Limit who can approve or merge

- etc

Pre-Requisites

- An AWS account

- AWS CLI installed and configured

- Completed Part 3A (or 3A and 3B if some resources needed to be imported)

- A GitHub account and project containing the static site

- GitHub account connected with CodeBuild. See this documentation for more information.

- From the documentation: "Use the AWS CodeBuild console to start creating a build project. When you use the console to connect (or reconnect) with GitHub, on the GitHub Authorize application page, for Organization access, choose Request access next to each repository you want to allow AWS CodeBuild to have access to, and then choose Authorize application. (After you have connected to your GitHub account, you do not need to finish creating the build project. You can leave the AWS CodeBuild console.) To instruct AWS CodeBuild to use this connection, in the source object, set the auth object's type value to OAUTH."

Resources

- AWS Documentation - AWS::CodeBuild::Project

- AWS Documentation - GitHub webhook events

- AWS Documentation - AWS::CodeBuild::Project Source

Creating the Front-End CodeBuild Project

This can be done via the AWS CLI, the Management Console, or a CloudFormation deployment. This example manages the pipeline through a CloudFormation stack deployed with Serverless Framework. This approach was chosen because the pipeline (which is being created and tested for the dev environment) will later be updated with additional triggers that need to be deployed and managed across multiple environments.

Note: Creating the CodeBuild project via the AWS Management Console will abstract out some of the complexity by creating the service role and basic policies. This service role will still need to be updated to provide access for any operations performed by the buildspec. In the example, the PutObject, DeleteObject, and ListBucket actions need to be allowed for the S3 bucket and the CreateInvalidation action will need to be allowed for the CloudFront distribution.

Pipeline Settings Chosen in this Example

| Field | Value |
|---|---|
| Project Name | sls-deploy-dev |
| Description | Builds front end static files and copies build files to dev endpoint dev.slsdeploy.com |
| Enable build badge | false |
| Enable concurrent build limit - optional | true |
| Concurrent build limit | 1 |
| Source provider | GitHub |
| Repository | Repository in my GitHub account |
| GitHub repository | https://github.com/USER/REPO.git |
| Source version - optional | release/develop |
| Rebuild every time a code change is pushed to this repository | true |
| Build type | Single build |
| Event type | PULL_REQUEST_MERGED |
| Environment image | Managed |
| Operating system | Ubuntu |
| Runtime(s) | Standard |
| Image | aws/codebuild/standard:6.0 |
| Image version | Always use the latest image for this runtime version |
| Environment type | Linux |
| Service role | New service role |
| Role name | codebuild-sls-deploy-dev-service-role |
| Build specifications | Insert build commands |
| Build commands | see below |
| Artifacts | No artifacts |
| CloudWatch logs - optional | true |
| Group name | slsdeploy.com/ |
| Stream name | dev |
| S3 logs - optional | false |

Steps

The steps followed to create the CodeBuild project are:

- Cleanup serverless.yml

- Create and format the buildspec file

- Add the targetBranch variables to the .config/env.json file

- Configure the CodeBuild project in the serverless.yml

- Configure the project's service IAM role and policies in the serverless.yml

- Update the bucket policy to accept CodeBuild projects

- Deploy the code to create the pipeline

- Test

- Restrict GitHub permissions

0. Cleanup serverless.yml

With the addition of the build pipelines and the required IAM policies, the serverless.yml file is becoming unmanageable. Serverless Framework supports splitting resources across multiple configuration files. See this documentation for more information.

Create a separate file for the existing infrastructure and another file for the CodeBuild project configuration. To achieve this, each file needs to start with the Resources: section definition, and the resources section in the main file becomes an array:

# serverless.yml
...
resources:
  - ${file(path/to/file.yml)}
  - ${file(path/to/second/file.yml)}
  - Resources:
      ...
      # Add inline resources if needed

The file structure this example follows is:

.
├── .config/
│   └── env.json
├── .git/
├── .gitignore
├── .serverless/
│   ├── cloudformation-template-create-stack.json
│   ├── cloudformation-template-update-stack.json
│   └── serverless-state.json
├── resources/
│   ├── front-end-codebuild-resources.yml
│   └── infrastructure-resources.yml
└── serverless.yml

1. Create and Format the Buildspec File

The buildspec is where all the build commands for the project are housed. It installs all dependencies, then builds and exports the static files before copying them to the destination S3 bucket. Lastly, the CloudFront cache for index.html is invalidated.

Note: The S3 bucket name is referenced through a variable to keep the CodeBuild project generic across environments.

version: 0.2

phases:
  pre_build:
    commands:
      - echo installing npm dependencies
      - npm install
  build:
    commands:
      - echo building latest env
      - npm run build
      - echo build successful
  post_build:
    commands:
      - echo copying files
      - aws s3 sync ./out s3://${self:custom.s3Bucket} --delete
      - aws s3 cp --cache-control="max-age=0, no-cache, no-store, must-revalidate" ./out/index.html s3://${self:custom.s3Bucket}
      - aws cloudfront create-invalidation --distribution-id DISTRIBUTION_ID --paths /index.html
      - echo successfully copied files

Format the buildspec into a single-line string by performing the following replacements:

- Replace each newline with \n

- Replace each double quote character with \"

version: 0.2\n\nphases:\n  pre_build:\n    commands:\n      - echo installing npm dependencies\n      - npm install\n  build:\n    commands:\n      - echo building latest env\n      - npm run build\n      - echo build successful\n  post_build:\n    commands:\n      - echo copying files\n      - aws s3 sync ./out s3://${self:custom.s3Bucket} --delete\n      - aws s3 cp --cache-control=\"max-age=0, no-cache, no-store, must-revalidate\" ./out/index.html s3://${self:custom.s3Bucket}\n      - aws cloudfront create-invalidation --distribution-id DISTRIBUTION_ID --paths /index.html\n      - echo successfully copied files
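The two replacements can also be scripted rather than done by hand. The following is a minimal Python sketch, not part of the original workflow; the function name buildspec_to_single_line is made up for this example, and the extra backslash-escaping step is an addition beyond the two replacements listed, included on the assumption that the result will sit inside a double-quoted YAML string.

```python
def buildspec_to_single_line(buildspec: str) -> str:
    """Escape a multi-line buildspec for use inside a double-quoted YAML string."""
    escaped = buildspec.strip("\n")
    # Extra step (assumption): escape existing backslashes first so that the
    # escapes added below are not confused with ones already in the file.
    escaped = escaped.replace("\\", "\\\\")
    # Replace each double quote character with \"
    escaped = escaped.replace('"', '\\"')
    # Replace each real newline with the two-character sequence \n
    return escaped.replace("\n", "\\n")


print(buildspec_to_single_line('commands:\n  - echo "done"\n'))
# prints: commands:\n  - echo \"done\"
```

Reading the buildspec from a file and passing its contents to the function would produce the string to paste into the BuildSpec property.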

2. Add the targetBranch variables to the .config/env.json file

Add the target branch that will be the source of truth for each environment. For example, for the dev environment, the target branch is release/develop:

// .config/env.json
{
  "dev": {
    "domainName": "dev.example.com",
    "hostedZoneId": "HOSTED_ZONE_ID", // Found in: Route 53 > Hosted Zones > example.com
    "hostedZone": "example.com",
    "s3Bucket": "dev.example.com",
    "certificateId": "CERTIFICATE_ID",
    "aliases": [
      "dev.example.com" // Note the difference between dev and prod aliases. Aliases cannot be shared by multiple distributions
    ],
    "targetBranch": "release/develop" // ADD
  },
  "prod": {
    "domainName": "example.com",
    "hostedZoneId": "HOSTED_ZONE_ID", // Found in: Route 53 > Hosted Zones > example.com
    "hostedZone": "example.com",
    "s3Bucket": "example.com",
    "certificateId": "CERTIFICATE_ID", // Found in: AWS Certificate Manager > Certificates
    "aliases": [
      "example.com",
      "www.example.com"
    ],
    "targetBranch": "main" // ADD
  }
}

Add the following custom environment variable in the serverless.yml:

# serverless.yml
custom:
  ...
  targetBranch: ${self:custom.env.targetBranch}
  ...
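Because every stage now needs a targetBranch entry, a quick check before deploying could catch a stage where the key was forgotten. The sketch below is one possible way to do that in Python; the names REQUIRED_KEYS and missing_keys are hypothetical, the key list is simply copied from the env.json example above, and the check assumes the real file is strict JSON with the explanatory // comments removed.

```python
import json

# Keys copied from the env.json example above; adjust if your file differs.
REQUIRED_KEYS = {
    "domainName", "hostedZoneId", "hostedZone", "s3Bucket",
    "certificateId", "aliases", "targetBranch",
}


def missing_keys(env_json_text: str) -> dict[str, list[str]]:
    """Return {stage: [missing keys]} for every stage lacking a required key."""
    # json.loads is strict: comments or trailing commas raise an error here.
    config = json.loads(env_json_text)
    problems = {}
    for stage, settings in config.items():
        missing = sorted(REQUIRED_KEYS - set(settings))
        if missing:
            problems[stage] = missing
    return problems


sample = '{"dev": {"domainName": "dev.example.com", "targetBranch": "release/develop"}}'
print(missing_keys(sample))  # lists the keys the shortened dev entry lacks
```

An empty result means every stage defines all of the listed keys, including targetBranch.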

3. Configure the CodeBuild Project in the serverless.yml

In the resources section, add and edit the following:

# resources/front-end-codebuild-resources.yml
Resources:
  ...
  CodeBuildProject:
    Type: AWS::CodeBuild::Project
    Properties:
      Name: sls-deploy-${self:custom.stage}
      Description: Builds front end static files and copies build files to dev endpoint ${self:custom.s3Bucket}
      ConcurrentBuildLimit: 1
      Environment:
        ComputeType: BUILD_GENERAL1_SMALL
        Image: aws/codebuild/standard:6.0
        Type: LINUX_CONTAINER
      LogsConfig:
        CloudWatchLogs:
          GroupName: slsdeploy.com/
          Status: ENABLED
          StreamName: ${self:custom.stage}
      ServiceRole: !Ref BuildPipelineServiceRole
      Source:
        BuildSpec:
          Fn::Join:
            - ""
            - - "version: 0.2\n\nphases:\n  pre_build:\n    commands:\n      - echo installing npm dependencies\n      - npm install\n  build:\n    commands:\n      - echo building latest env\n      - npm run build\n      - echo build successful\n  post_build:\n    commands:\n      - echo copying files\n      - aws s3 sync ./out s3://dev.slsdeploy.com --delete\n      - aws s3 cp --cache-control=\"max-age=0, no-cache, no-store, must-revalidate\" ./out/index.html s3://dev.slsdeploy.com\n      - aws cloudfront create-invalidation --distribution-id "
              - !Ref WebAppCloudFrontDistribution
              - " --paths /index.html\n      - echo successfully copied files"
        GitCloneDepth: 1
        Location: https://github.com/daganherceg/sls-deploy.git
        Type: GITHUB
      SourceVersion: ${self:custom.targetBranch}
      Triggers:
        Webhook: true
        FilterGroups:
          - - Type: EVENT
              Pattern: PULL_REQUEST_MERGED
            - Type: BASE_REF
              Pattern: ${self:custom.targetBranch}
      Visibility: PRIVATE
      Artifacts:
        Type: NO_ARTIFACTS
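How the two filters in the FilterGroups entry combine is easy to misread, so here is a small Python model of the matching rule as the AWS webhook documentation describes it: a build starts if any filter group matches, and a group matches only when all of its filters match. The model assumes BASE_REF patterns are applied as unanchored regular-expression searches against a ref such as refs/heads/release/develop. The names WebhookFilter, filter_matches, and should_trigger are made up for this sketch and are not part of any AWS SDK.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class WebhookFilter:
    type: str      # e.g. "EVENT" or "BASE_REF"
    pattern: str   # comma-separated events, or a regular expression


def filter_matches(f: WebhookFilter, event: dict) -> bool:
    value = event.get(f.type, "")
    if f.type == "EVENT":
        # EVENT patterns are a comma-separated list of event names.
        return value in {name.strip() for name in f.pattern.split(",")}
    # Assumption: other filter types are unanchored regex searches.
    return re.search(f.pattern, value) is not None


def should_trigger(filter_groups: list, event: dict) -> bool:
    # Any group may match (OR); inside a group every filter must match (AND).
    return any(
        all(filter_matches(f, event) for f in group)
        for group in filter_groups
    )


groups = [[
    WebhookFilter("EVENT", "PULL_REQUEST_MERGED"),
    WebhookFilter("BASE_REF", "release/develop"),
]]
merged = {"EVENT": "PULL_REQUEST_MERGED", "BASE_REF": "refs/heads/release/develop"}
print(should_trigger(groups, merged))
```

If the assumption about unanchored matching holds, a pattern such as ^refs/heads/release/develop$ would be the stricter choice for the BASE_REF filter, since the plain branch name would also match a branch like release/develop-hotfix.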

4. Configure the Project's Service IAM Role and Policies in the serverless.yml

At the top of the Resources section in the resources/front-end-codebuild-resources.yml file, add the following (no changes are required unless the resource name BuildPipelineCodeBuildBasePolicy was changed):

# resources/front-end-codebuild-resources.yml
Resources:
  BuildPipelineCodeBuildBasePolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: CodeBuildBasePolicy-sls-deploy-${self:custom.stage}-${aws:region}
      Roles:
        - !Ref BuildPipelineServiceRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - logs:*
            Resource:
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:/sls-deploy-${self:custom.stage}
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:/sls-deploy-${self:custom.stage}/:*
          - Effect: Allow
            Action:
              - s3:GetBucketAcl
              - s3:PutObject
              - s3:GetObject
              - s3:GetBucketLocation
              - s3:GetObjectVersion
            Resource:
              - arn:aws:s3:::codepipeline-${aws:region}-*
          - Effect: Allow
            Action:
              - s3:PutObject
              - s3:ListBucket
              - s3:DeleteObject
            Resource:
              - arn:aws:s3:::${self:custom.s3Bucket}
              - arn:aws:s3:::${self:custom.s3Bucket}/*
          - Effect: Allow
            Action: cloudfront:CreateInvalidation
            Resource: !Sub
              - 'arn:aws:cloudfront::${AWS::AccountId}:distribution/${DistributionId}'
              - { DistributionId: !Ref WebAppCloudFrontDistribution }
  BuildPipelineCodeBuildCloudWatchLogsPolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: CodeBuildCloudWatchLogsPolicy-sls-deploy-${self:custom.stage}-${aws:region}
      Roles:
        - !Ref BuildPipelineServiceRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - logs:CreateLogGroup
              - logs:CreateLogStream
              - logs:PutLogEvents
            Resource:
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:slsdeploy.com/
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:slsdeploy.com/:*
  BuildPipelineServiceRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: codebuild-sls-deploy-${self:custom.stage}-service-role
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - codebuild.amazonaws.com
            Action: sts:AssumeRole
      Policies:
        - PolicyName: FrontEndBuildPipelinesCodeBuildPermissionsPolicy-sls-deploy-infra-${self:custom.stage}-${aws:region}
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - codebuild:*
                Resource:
                  - arn:aws:codebuild:${aws:region}:${aws:accountId}:report-group/sls-deploy-${self:custom.stage}-*

5. Update the Bucket Policy to Accept CodeBuild Projects

Append the following statement to the existing static S3 bucket policy (WebAppS3BucketPolicy in this example):

# resources/infrastructure-resources.yml
Resources:
  ...
  WebAppS3BucketPolicy:
    ...
    Properties:
      PolicyDocument:
        Statement:
          ...
          - Sid: AllowCodeBuildActionsToS3
            Effect: Allow
            Action: '*'
            Principal:
              AWS: 'arn:aws:iam::${aws:accountId}:role/codebuild-sls-deploy-${self:custom.stage}-service-role'
            Resource:
              - arn:aws:s3:::${self:custom.s3Bucket}
              - arn:aws:s3:::${self:custom.s3Bucket}/*

6. Deploy the code to create the pipeline

sls deploy --region ap-southeast-2 --stage dev --verbose

This will update the existing CloudFormation stack and add the following resources:

- CodeBuild project

- CodeBuild service IAM role

- Two IAM policies for the service role

7. Test

Note: To test either trigger, the target branch needs to have code pushed or merged to it. The branch is the source of truth and is where the code is downloaded from before building.

The pipeline can be triggered by starting a build from the AWS Management Console or by merging a pull request into the release/develop branch.

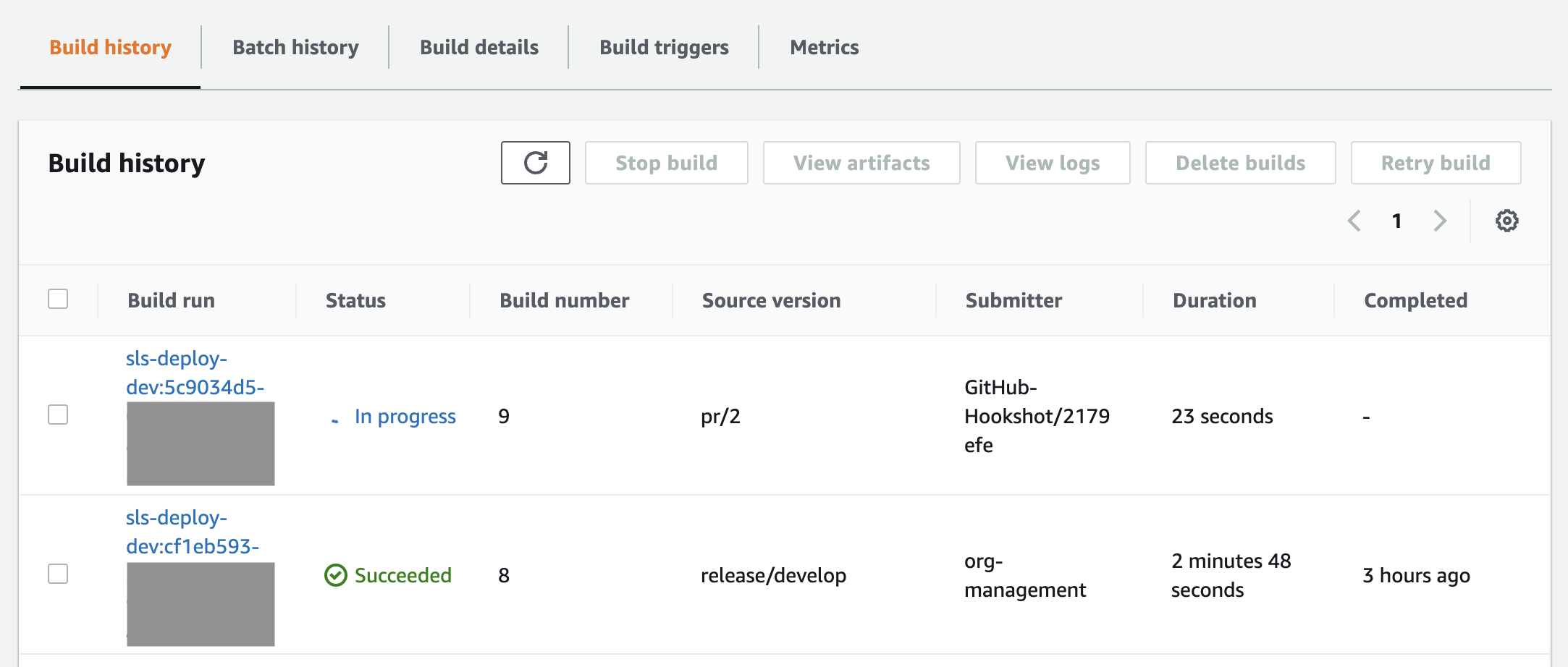

The above image shows two builds: build 8 which was triggered in the AWS Management Console and build 9 which was triggered after merging an existing PR.
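For a scripted alternative to starting the build from the console, a sketch along these lines could start the project and wait for the result. It assumes a boto3 CodeBuild client is passed in (start_build and batch_get_builds are existing boto3 client methods); the function name run_build and the polling interval are choices made for this example only.

```python
import time

# Build statuses after which CodeBuild will not change the result any further.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "FAULT", "TIMED_OUT", "STOPPED"}


def run_build(codebuild, project_name: str, poll_seconds: float = 10.0) -> str:
    """Start a build for the project and poll until it reaches a final status."""
    build_id = codebuild.start_build(projectName=project_name)["build"]["id"]
    while True:
        build = codebuild.batch_get_builds(ids=[build_id])["builds"][0]
        if build["buildStatus"] in TERMINAL_STATUSES:
            return build["buildStatus"]
        # Still IN_PROGRESS: wait before asking again.
        time.sleep(poll_seconds)
```

With AWS credentials configured, it could be called as run_build(boto3.client("codebuild", region_name="ap-southeast-2"), "sls-deploy-dev"), using the region and project name from this example.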

8. Restrict GitHub permissions

Note: These are only enforceable for GitHub Team or Enterprise organization accounts

Example branch permissions enabled:

- Branch name pattern: release/*, main

- Require a pull request before merging: ensures that all code going to these branches is reviewed and approved by the required number of developers before it changes the release or main branches. To make a change, a developer must push their work to a branch other than a release branch or main and then create a pull request

- Lock branch: ensures the branch cannot be changed

What's Next

In the next post, the backend deployment strategy will be updated to take advantage of CodeBuild deployments. There are a few additional considerations for the infrastructure deployment via CodeBuild, such as:

- Config file: it is currently housed in a local directory and should not be included in the git repo

- IAM permissions: the IAM permissions need to exist in order to kick off the first deployment, which itself creates and manages the IAM permissions