CI/CD Pipeline for Infrastructure with CodeBuild and Serverless Framework

Part 5 of "Deploy Static Website To AWS"

Table of Contents

- Overview

- The Problem

- The Goal

- The Target Architecture

- Limitations

- Creating the Infrastructure CodeBuild Resources

- Differences Between the Front-End and Infrastructure CodeBuild Projects

- Thoughts on Common Stack

- Create the Common Stack

- Deploy stack

- Create the Infrastructure Build Script

- Create the CodeBuild Project

- Deploy Steps

- Update the Buildspec

- Merge Code to Deploy

- [Troubleshooting] MalformedPolicy:

- What's Next

Overview

The Problem

Part 3 in this series, which focused on creating and managing resources via a template, significantly reduced the complexity of creating and managing resources across environments and projects. With little setup, developers can quickly deploy the infrastructure and the pipeline for front-end deployment. However, the infrastructure deployment itself still needs to be kicked off and managed manually.

This manual deployment and management has some drawbacks:

- Developers need to actively deploy each time code is committed, which requires direct access via the AWS CLI

- Deployed versions can differ from the repo

- Can deploy from any branch

- Can deploy uncommitted code

- Developers can accidentally deploy to the wrong environment

- And so on

The Goal

Similar to the pipeline created in part 4 for the front-end, the infrastructure should have its own deployment pipeline, which is triggered by certain changes to the GitHub repo.

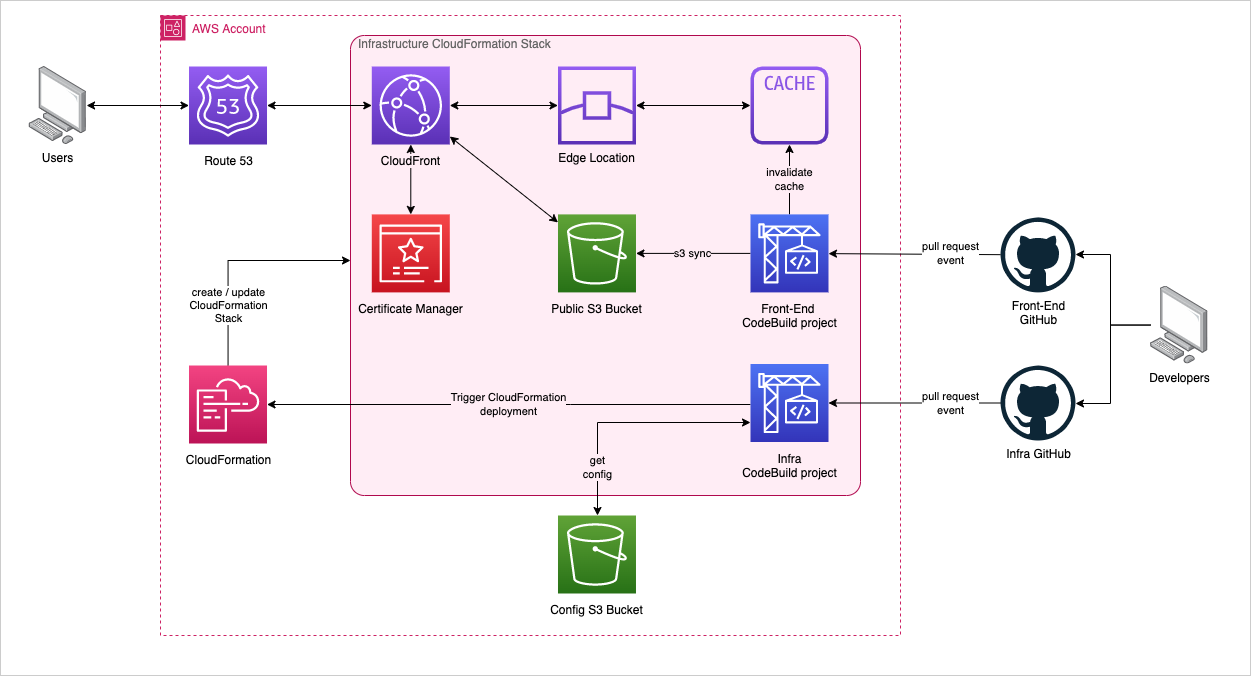

The Target Architecture

By the end of this post, the front-end and the infrastructure will each have their own pipeline for each environment. Any merge into the target release branch of the GitHub repo will trigger the respective deployment for the target environment.

Limitations

The biggest downside of this approach is the number of CodeBuild projects (i.e. pipelines) being created: one for each and every deployed environment. In this series we have been targeting a development and a production environment, which means a minimum of 4 pipelines. As of writing, CodeBuild costs $1 per active pipeline plus the usage on the pipeline. CodeBuild doesn't charge for any pipelines within the first 30 days of their creation to promote experimentation, but this is still a substantial cost for our infrastructure. In a future post, we will explore reducing the number of pipelines in a multi-environment landscape.

Creating the Infrastructure CodeBuild Resources

Differences Between the Front-End and Infrastructure CodeBuild Projects

Following on from part 4, and duplicating the front-end CodeBuild resources, there are only a few updates/considerations in order to get the new pipeline working:

- Config file

  - Currently not being pushed to GitHub, let's keep it that way

  - Opt to house the config file in an S3 bucket

- Create a common stack, which includes:

  - A common S3 bucket for the config file

  - Allow all environments to use the common configuration

  - In the future we will use this to house the blog posts, allowing the separation between the blog posts and the front-end changes

- Duplicate existing code pipeline .yml file

  - Import the new infrastructure resources into the serverless.yml file

  - New buildspec to deploy infrastructure

  - Update resource identifiers and names to differentiate between Infra and FE resources

  - Update the references to the Infra resources

- Remove unnecessary IAM permissions in the policy documents

  - No need to copy anything to the static files bucket

  - No need to invalidate any cache

Thoughts on Common Stack

- We store one config object which contains the config for all environments; it may be better to create a separate config file for each env

- We will store the config as a document in S3. It is secure enough for our purposes

  - We are not storing any passwords or anything sensitive

- Create a new 'operations' bucket for the config. It may seem like overkill, but we will use this more later

  - Don't want to use the WebApp bucket as this is only for public content

  - We could use the deployment bucket, but I like keeping the deployment bucket only for deployments and as more of a throwaway

  - Set the bucket deletion policy to retain the bucket

- Deploy the common stack first to create the config bucket and file, then update the buildspec to copy the config from S3 into the local env

- In this example the policy document is set to allow all get operations from S3... this could probably be more restrictive
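Two of the points above, keeping the bucket on stack removal and tightening read access, could be expressed along these lines. This is a minimal sketch rather than the template used in this series: the `DeletionPolicy: Retain` attribute, the `AllowConfigReadOnly` statement ID, and the restriction to the `config/` prefix are assumptions layered on top of the bucket described here.

```yaml
# Sketch only: fragment of the common stack's serverless.yml
resources:
  Resources:
    CommonS3Bucket:
      Type: AWS::S3::Bucket
      # Assumption: keep the bucket (and the config in it) if the stack is removed
      DeletionPolicy: Retain
      Properties:
        BucketName: ${self:custom.commonBucket}
    CommonS3BucketPolicy:
      Type: AWS::S3::BucketPolicy
      Properties:
        Bucket:
          Ref: CommonS3Bucket
        PolicyDocument:
          Statement:
            - Sid: AllowConfigReadOnly # hypothetical statement ID
              Effect: Allow
              Principal:
                Service: 'cloudformation.amazonaws.com'
              # Assumption: only objects under config/ need to be readable
              Action:
                - s3:GetObject
              Resource:
                - arn:aws:s3:::${self:custom.commonBucket}/config/*
```

Whether the narrower statement is sufficient depends on what else ends up in the bucket later, so treat the prefix as a starting point.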

Create the Common Stack

Create a new repo outside of your infrastructure and front-end repos:

mkdir common-resources

Add the serverless.yml below to create the common S3 bucket and policy:

# serverless.yml
service: sls-deploy-common
frameworkVersion: '3'

provider:
  name: aws
  runtime: python3.8
  stackName: sls-deploy-common # overwrites the stack name to remove the stage

custom:
  commonBucket: sls-deploy-common

resources:
  Resources:
    CommonS3Bucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: ${self:custom.commonBucket}
        AccessControl: Private
    CommonS3BucketPolicy:
      Type: AWS::S3::BucketPolicy
      Properties:
        Bucket:
          Ref: CommonS3Bucket
        PolicyDocument:
          Statement:
            - Sid: AllowCloudFormationGetAccess
              Effect: Allow
              Principal:
                Service: 'cloudformation.amazonaws.com'
              Action:
                - s3:GetObject
                - s3:ListBucket
              Resource:
                - arn:aws:s3:::${self:custom.commonBucket}
                - arn:aws:s3:::${self:custom.commonBucket}/*

Deploy stack

Note: there is no need to specify the environment, as we are creating a stack which will be shared by all environments:

sls deploy --region ap-southeast-2 --verbose

Copy the config from local into the bucket:

aws s3 cp ../infra/.config/env.json s3://sls-deploy-common/config/

Create the Infrastructure Build Script

The build script needs to:

- Copy the config from the common bucket

- Run the deploy command

version: 0.2

phases:
  pre_build:
    commands:
      - echo installing serverless
      - npm install -g serverless
  post_build:
    commands:
      - echo copying config file to locally
      - mkdir .config
      - aws s3 cp s3://sls-deploy-common/config/env.json ./.config/
      - echo config copying echo deploying serverless infrastructure
      - sls deploy --region ${aws:region} --stage ${self:custom.stage} --verbose
      - echo successfully deployed

Format the buildspec as a single-line string with escaped newlines so that it can be used as the BuildSpec value in the CodeBuild project:

version: 0.2\n\nphases:\n  pre_build:\n    commands:\n      - echo installing serverless\n      - npm install -g serverless\n  post_build:\n    commands:\n      - echo copying config file to locally\n      - mkdir .config\n      - aws s3 cp s3://sls-deploy-common/config/env.json ./.config/\n      - echo config copying echo deploying serverless infrastructure\n      - sls deploy --region ${aws:region} --stage ${self:custom.stage} --verbose\n      - echo successfully deployed
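As an alternative to hand-escaping the newlines, the same buildspec could probably be embedded with a YAML block scalar, since the BuildSpec property takes the buildspec as a string. The fragment below is a sketch of how that might look, not the approach used in this post; Serverless variables such as `${self:custom.stage}` should still be resolved inside the block.

```yaml
# Sketch only: fragment of an AWS::CodeBuild::Project resource
Source:
  Type: GITHUB
  # "|" keeps the line breaks, so no \n escaping is needed
  BuildSpec: |
    version: 0.2
    phases:
      pre_build:
        commands:
          - echo installing serverless
          - npm install -g serverless
      post_build:
        commands:
          - mkdir .config
          - aws s3 cp s3://${self:custom.commonBucket}/config/env.json ./.config/
          - sls deploy --region ${aws:region} --stage ${self:custom.stage} --verbose
```

The trade-off is mostly readability: the block form is easier to diff and review, while the escaped form keeps the resource definition compact.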

Create the CodeBuild Project

Similar to the front-end CodeBuild project, create the pipeline to deploy the infrastructure resources:

# resources/infrastructure-codebuild-resources.yml
Resources:
  InfraBuildPipelineCodeBuildBasePolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: CodeBuildBasePolicy-sls-deploy-infra-${self:custom.stage}-${aws:region}
      Roles:
        - !Ref InfraBuildPipelineServiceRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - logs:*
            Resource:
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:/sls-deploy-${self:custom.stage}-infra
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:/sls-deploy-${self:custom.stage}-infra/:*
          - Effect: Allow
            Action:
              - s3:GetBucketAcl
              - s3:PutObject
              - s3:GetObject
              - s3:GetBucketLocation
              - s3:GetObjectVersion
            Resource:
              - arn:aws:s3:::codepipeline-${aws:region}-*
          # Read access to the config in the common bucket
          - Effect: Allow
            Action:
              - s3:GetObject
              - s3:ListBucket
            Resource:
              - arn:aws:s3:::${self:custom.commonBucket}
              - arn:aws:s3:::${self:custom.commonBucket}/*
          - Effect: Allow
            Action:
              - cloudformation:*
            Resource:
              - arn:aws:cloudformation:${aws:region}:${aws:accountId}:stack/${self:custom.stackName}
              - arn:aws:cloudformation:${aws:region}:${aws:accountId}:stack/${self:custom.stackName}/*
          - Effect: Allow
            Action: cloudformation:ValidateTemplate
            Resource: '*'
          # Full access to the Serverless deployment bucket and its objects
          - Effect: Allow
            Action: s3:*
            Resource:
              - Fn::Join:
                  - ''
                  - - 'arn:aws:s3:::'
                    - Ref: ServerlessDeploymentBucket
              - Fn::Join:
                  - ''
                  - - 'arn:aws:s3:::'
                    - Ref: ServerlessDeploymentBucket
                    - '/*'

  InfraBuildPipelineCodeBuildCloudWatchLogsPolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: CodeBuildCloudWatchLogsPolicy-sls-deploy-infra-${self:custom.stage}-${aws:region}
      Roles:
        - !Ref InfraBuildPipelineServiceRole
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - logs:CreateLogGroup
              - logs:CreateLogStream
              - logs:PutLogEvents
            Resource:
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:slsdeploy.com-infra/
              - arn:aws:logs:${aws:region}:${aws:accountId}:log-group:slsdeploy.com-infra/:*

  InfraBuildPipelineServiceRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: codebuild-sls-deploy-infra-${self:custom.stage}-service-role
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - codebuild.amazonaws.com
            Action: sts:AssumeRole
      Policies:
        - PolicyName: InfraBuildPipelinesIAMPermissionsPolicy-sls-deploy-infra-${self:custom.stage}-${aws:region}
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - iam:Get*
                  - iam:List*
                  - iam:PassRole
                  - iam:CreateRole
                  - iam:DeleteRole
                  - iam:AttachRolePolicy
                  - iam:DeleteRolePolicy
                  - iam:PutRolePolicy
                  - iam:TagRole
                  - iam:UntagRole
                Resource: arn:aws:iam::${aws:accountId}:role/codebuild-sls-deploy-infra-${self:custom.stage}-service-role
        - PolicyName: InfraBuildPipelinesCodeBuildPermissionsPolicy-sls-deploy-infra-${self:custom.stage}-${aws:region}
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - codebuild:*
                Resource:
                  - arn:aws:codebuild:${aws:region}:${aws:accountId}:report-group/sls-deploy-infra-${self:custom.stage}-*

  InfraCodeBuildProject:
    Type: AWS::CodeBuild::Project
    Properties:
      Name: sls-deploy-infra-${self:custom.stage}
      Description: Builds the slsdeploy infrastructure for the ${self:custom.stage} environment
      ConcurrentBuildLimit: 1
      Environment:
        ComputeType: BUILD_GENERAL1_SMALL
        Image: aws/codebuild/standard:6.0
        Type: LINUX_CONTAINER
      LogsConfig:
        CloudWatchLogs:
          GroupName: slsdeploy.com-infra/
          Status: ENABLED
          StreamName: ${self:custom.stage}
      ServiceRole: !Ref InfraBuildPipelineServiceRole
      Source:
        BuildSpec: "version: 0.2\n\nphases:\n  pre_build:\n    commands:\n      - echo installing serverless\n      - npm install -g serverless\n  post_build:\n    commands:\n      - echo copying config file to locally\n      - mkdir .config\n      - aws s3 cp s3://${self:custom.commonBucket}/config/env.json ./.config/\n      - echo config copying echo deploying serverless infrastructure\n      - sls deploy --region ${aws:region} --stage ${self:custom.stage} --verbose\n      - echo successfully deployed"
        GitCloneDepth: 1
        Location: https://github.com/daganherceg/sls-deploy-infra.git
        Type: GITHUB
      SourceVersion: ${self:custom.targetBranch}
      Triggers:
        Webhook: true
        # Build only when a pull request is merged into the target branch
        FilterGroups:
          - - Type: EVENT
              Pattern: PULL_REQUEST_MERGED
            - Type: BASE_REF
              Pattern: ${self:custom.targetBranch}
      Visibility: PRIVATE
      Artifacts:
        Type: NO_ARTIFACTS

Deploy Steps

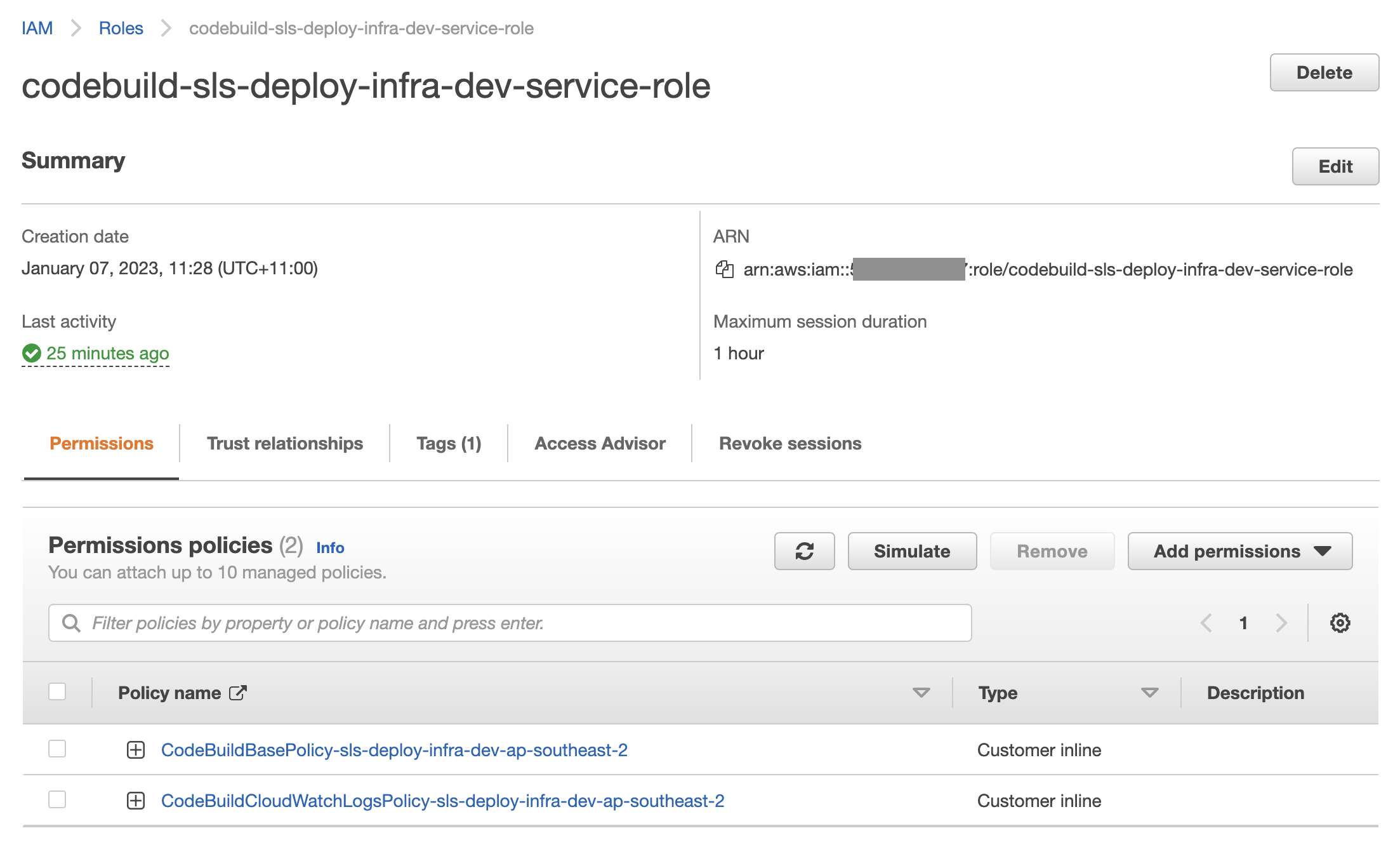

This is a bit of a chicken-and-egg situation: in order for the CodeBuild pipeline to kick off the deployment and update the CloudFormation stack, the IAM permissions defined above need to be applied to the existing service role. Either update the IAM permissions manually in the AWS Console or kick off a deployment from the command line as normal.

Another approach to this would be creating the IAM role manually or within the common resources stack and referencing it in the serverless template.
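That second option might look roughly like the following. It is a sketch under assumptions: the output name `InfraBuildRoleArn` and the role's placement in the common stack are hypothetical, and the role would still need the policies defined above attached to it.

```yaml
# Sketch only. In the common stack's serverless.yml:
resources:
  Resources:
    InfraBuildPipelineServiceRole:
      Type: AWS::IAM::Role
      Properties:
        AssumeRolePolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Principal:
                Service:
                  - codebuild.amazonaws.com
              Action: sts:AssumeRole
  Outputs:
    # Hypothetical output name, read by the infrastructure stack
    InfraBuildRoleArn:
      Value:
        Fn::GetAtt: [InfraBuildPipelineServiceRole, Arn]

# In the infrastructure stack, the CodeBuild project could then use:
#   ServiceRole: ${cf:sls-deploy-common.InfraBuildRoleArn}
```

Because the role would exist before the infrastructure stack is deployed, the first pipeline run would not depend on a manual IAM update. The cost is that one role is shared by every environment, which may or may not be acceptable.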

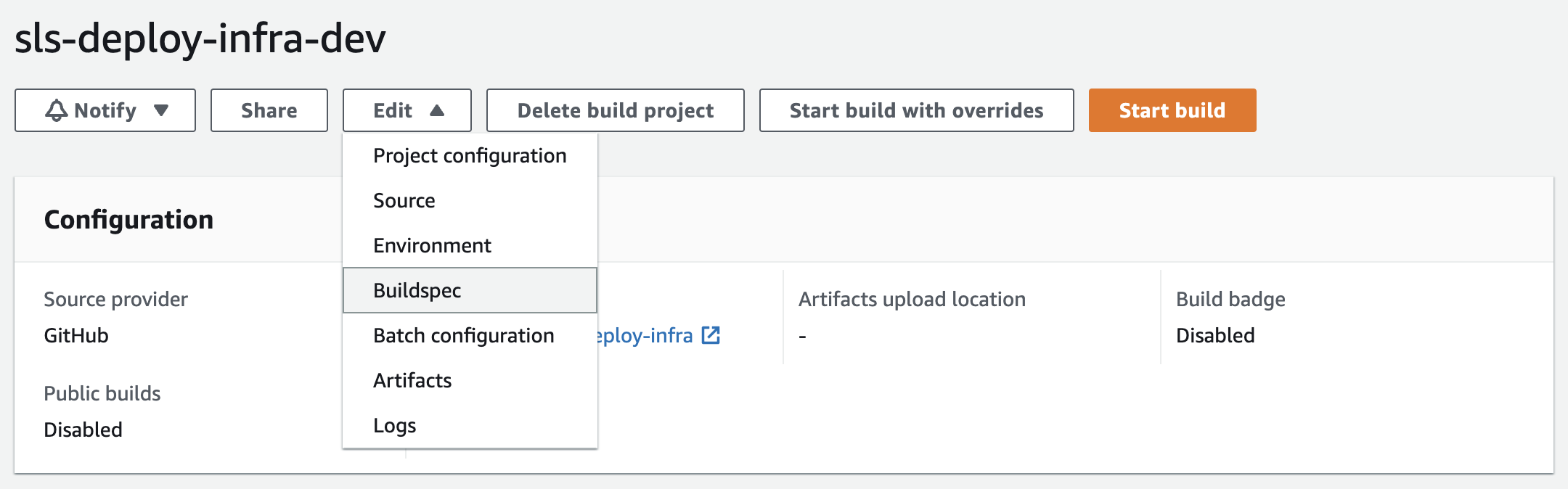

Update the Buildspec

It may be necessary to also update the buildspec manually in the CodeBuild console. I have noticed that in some instances CodeBuild uses the existing spec in the pipeline and have needed to update this manually. More investigation is required to determine the causes and a more stable way of updating the buildspec when required.
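One way to sidestep an inline buildspec going stale could be to keep the buildspec in the repository and point the project at it, passing the stage through an environment variable. This is an untested sketch, not a confirmed fix for the behaviour described above; the file name `buildspec.infra.yml` and the variable name `STAGE` are assumptions.

```yaml
# Sketch only: fragment of the AWS::CodeBuild::Project properties
Environment:
  ComputeType: BUILD_GENERAL1_SMALL
  Image: aws/codebuild/standard:6.0
  Type: LINUX_CONTAINER
  EnvironmentVariables:
    # Hypothetical variable read by the buildspec in the repo
    - Name: STAGE
      Value: ${self:custom.stage}
Source:
  Type: GITHUB
  Location: https://github.com/daganherceg/sls-deploy-infra.git
  # Path relative to the repository root instead of an inline string
  BuildSpec: buildspec.infra.yml

# buildspec.infra.yml would then run something like:
#   sls deploy --region $AWS_REGION --stage $STAGE --verbose
```

With this layout the buildspec is versioned with the code it deploys, so a change to the build steps arrives through the same merge that triggers the build.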

Merge Code to Deploy

Once the IAM permissions are updated, commit future changes to a feature branch and merge them into release/develop or main in GitHub to kick off a build.

[Troubleshooting] MalformedPolicy:

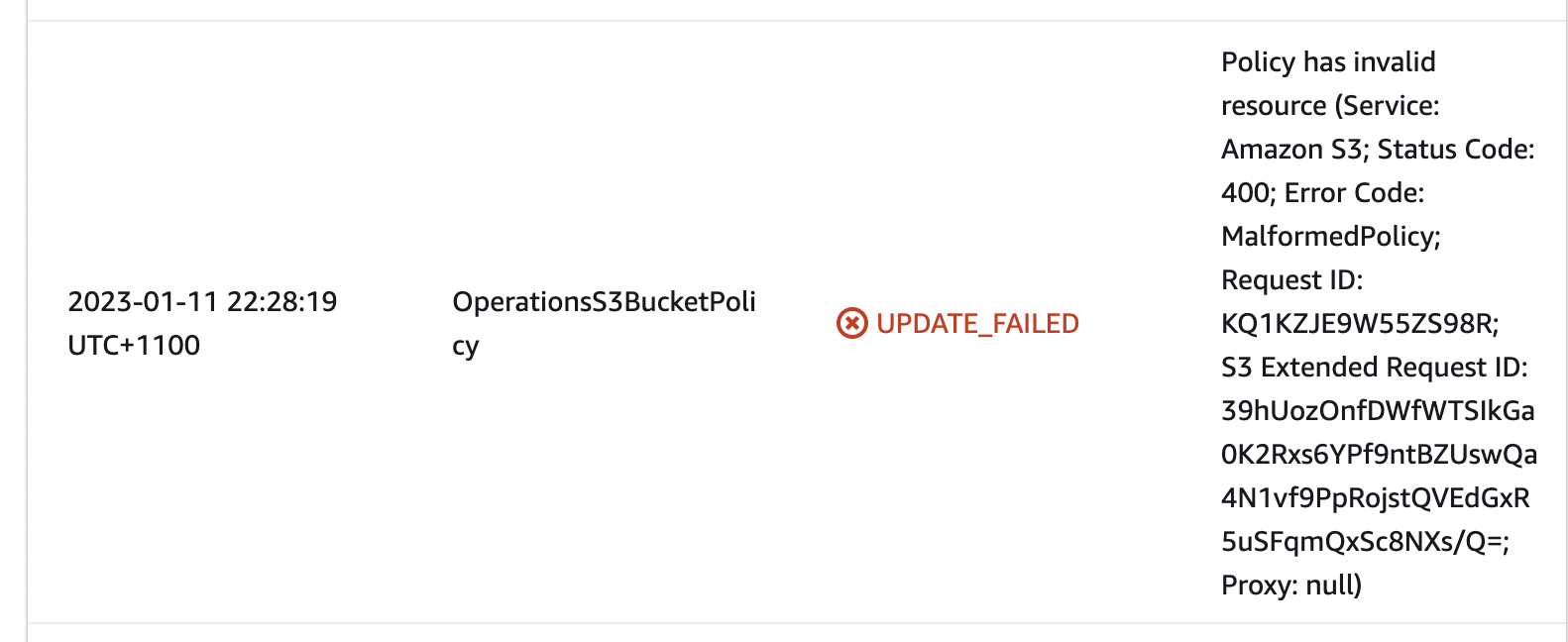

When getting the following error during deployment:

Error:

UPDATE_FAILED: OperationsS3BucketPolicy (AWS::S3::BucketPolicy)

Policy has invalid resource (Service: Amazon S3; Status Code: 400; Error Code: MalformedPolicy; Request ID: REQUEST_ID; S3 Extended Request ID: EXTENDED REQUEST_ID/Q=; Proxy: null)

The CloudFormation event log in the CloudFormation Management Console is generally the first place to check. However, in this instance, the logs do not provide much more information other than that one of the resources is invalid.

I also generally check CloudTrail, which sometimes has a more meaningful error message. In this instance, not so much:

{
  ...
  "errorMessage": "Policy has invalid resource"
  ...
}

In one case, the source of this error was an incorrectly configured variable reference. I had accidentally written targetBranch: ${self.custom.env.targetBranch} (with a dot after self) instead of targetBranch: ${self:custom.env.targetBranch}.
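For reference, the difference is a single character. The fragment below is illustrative only; my assumption, judging by the error, is that the misspelled reference is left unresolved and passed through as literal text rather than being rejected up front.

```yaml
custom:
  # Incorrect: "self." is not a variable source, so this is not resolved
  # targetBranch: ${self.custom.env.targetBranch}

  # Correct: "self:" reads from the current serverless.yml
  targetBranch: ${self:custom.env.targetBranch}
```

Searching the template for `${self.` is a quick way to rule this out before digging through CloudFormation or CloudTrail.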

What's Next

The next post focuses on decoupling the front-end deployment by concern: a deployment should occur either when the front-end website is updated or when a blog post is created, updated, or deleted. We will also look at housing the blog posts in a central location to ensure that the blog articles are the same across all environments.